Text may contain bad spelling, incorrect expressions, verbal turns, sentence constructions, etc.

Most likely, you’re getting noise or just a blank instead of a beautiful image when trying to generate with the Flux model in Stable Diffusion web UI by AUTOMATIC1111.

Or maybe you’re get error “You do not have CLIP state dict!” when using the Flux model on another panel.

And that’s probably why you’re reading this.

Not tutorial on setting up local installation for image generation.

If you’re getting noise or blank image, that means your panel doesn’t support Flux models.

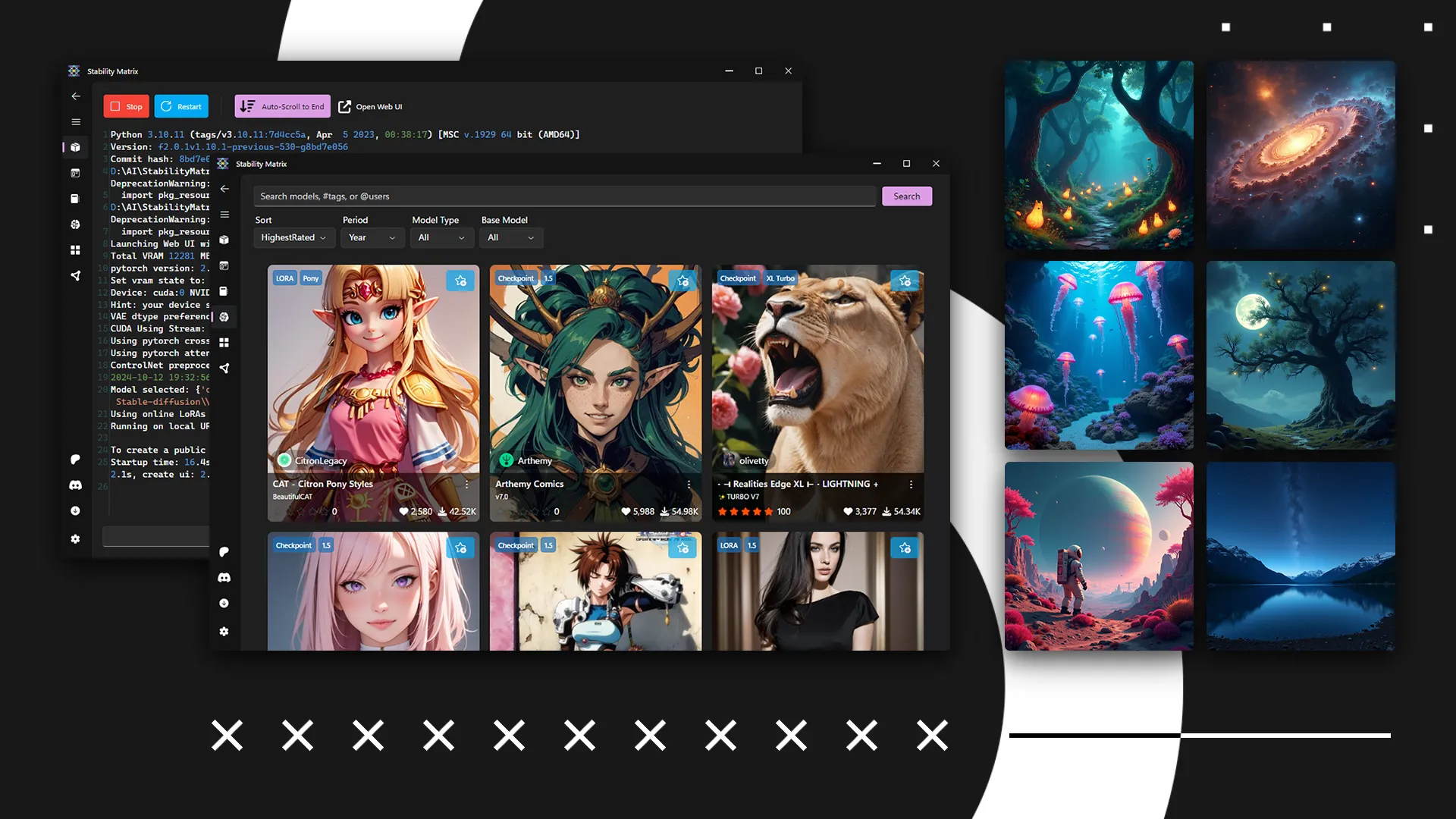

As of the time of writing, Flux works in WebUI Forge and ComfyUI, both of which are listed in the Stability Matrix. Also, for now, Flux models work best with NVIDIA GPUs.

When running Flux models (or any other models based on Flux), you might run into this error:

| |

This happens because the text encoder is missing. Someone refer to it as CLIP, though technically they’re slightly different things.

To make Flux model running, you’ll need to download the encoders and VAE.

Download encoders, clip-l* and t5* from here.

Whether you should use fp8 or fp16 depends on your hardware.

If you have low VRAM (4-8GB) and limited RAM (8-16GB), use fp8.

If you have mid-range VRAM (11-12GB) and a lot of RAM (32GB+), you can use fp16.

If you have plenty of everything, especially VRAM (20GB+), just go with fp16.

Now, download the VAE files: vae/diffusion_pytorch_model.safetensors and ae.safetensors from here or here.

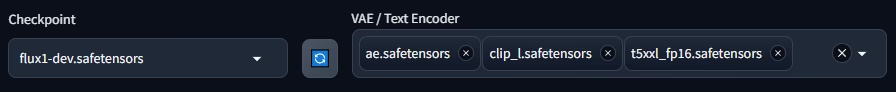

Place VAE into models\VAE, and encoders into models\text_encoder or models\CLIP (if you’re using Stability Matrix).

Now all that’s left is to load the VAE and encoders from the menu.

You can load them in any order.

For more details, check out this discussion from the WebUI Forge developer.